Data centers make our digital lives possible. Each year they consume more than two percent of all power generated, and keeping them cool costs an estimated $1.4 billion. In this post I’ll take a look at a novel idea that may bring that cost down: underwater data centers.

Have you ever wondered how much energy it takes to store all of your cat pictures in the cloud? Better yet: have you ever wondered how much energy is required to store everyone’s cat pictures? You might be surprised. In 2014, U.S. data centers consumed about 70 billion kilowatt-hours (kWh), or more than six times the annual electricity consumption of Washington, DC [1][10]. That’s a lot of cat memes being served.

The Terrestrial Data Center

Data centers are centralized locations that, at a very basic level, house racks of servers that store data and perform computations. They make possible bitcoin mining, real-time language translation, Netflix streaming, online video games, and the processing of bank payments, among many other things. These server farms range in size from a small closet using tens of kilowatts (kW) to Costco-sized warehouses requiring hundreds of megawatts (MW).

Data centers need a good deal of energy, not just to power the servers but also for auxiliary systems such as monitoring equipment, lighting, and, most importantly, cooling. Computers rely on many, many transistors, which also behave like resistors. When current passes through a resistor, it generates heat, just like in a toaster. If that heat is not removed, it can lead to overheating, reducing the efficiency and lifetime of the processor or, in extreme cases, destroying it. Data centers face the same problem as a single computer, on a much larger scale.

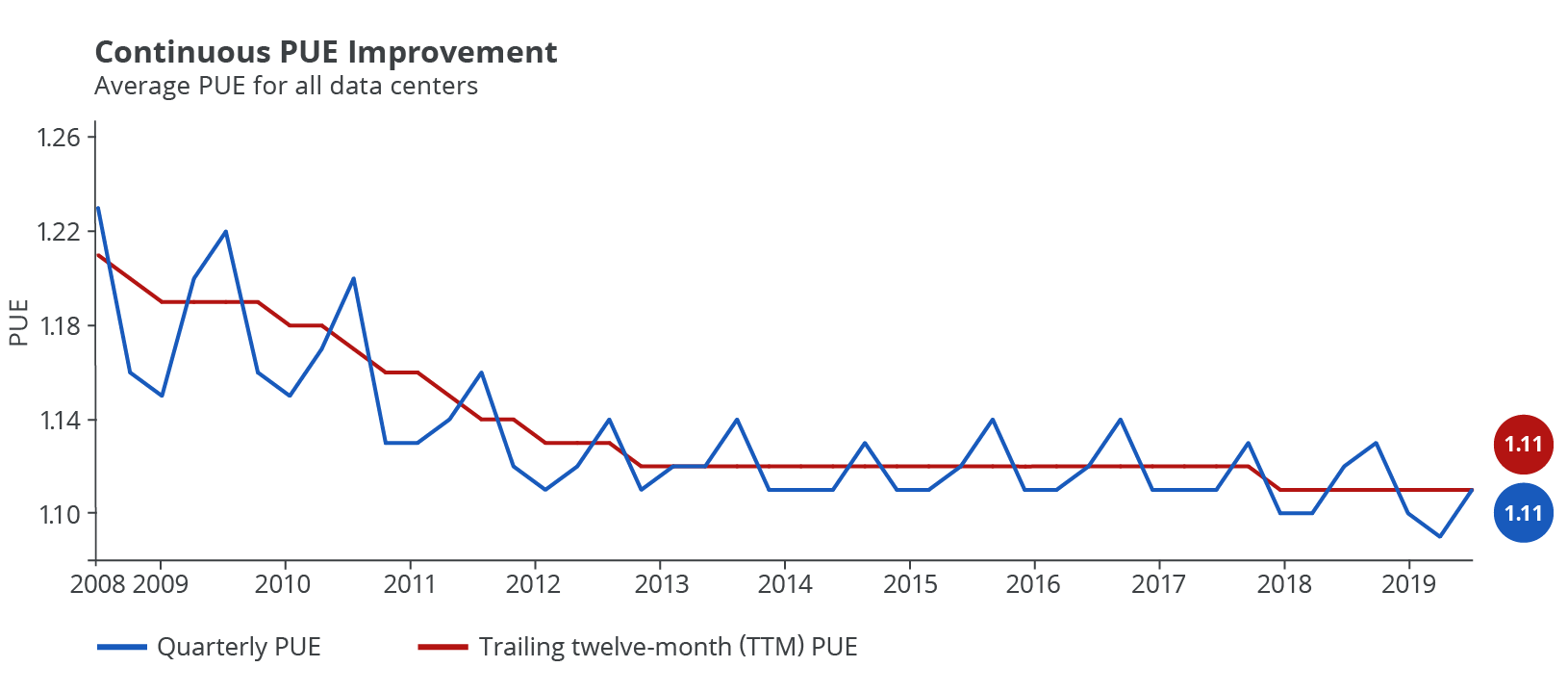

Data centers exist to store and manage data, so any power used by the facility for other purposes is considered ‘overhead’. A helpful way to measure a facility’s power overhead is the power usage effectiveness (PUE) ratio: the ratio of total facility power to IT equipment power. A PUE of 1 would mean that the facility has zero power overhead, whereas a PUE of 2 would mean that for every watt of IT power, an additional watt is used for auxiliary systems.
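To make the ratio concrete, here is a minimal Python sketch of the PUE definition and of the share of total power that goes to overhead. The function names `pue` and `overhead_fraction` are illustrative only, and the 1,000 kW facility is hypothetical.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_fraction(pue_value: float) -> float:
    """Share of total facility power that goes to non-IT (auxiliary) systems."""
    return (pue_value - 1.0) / pue_value


# Hypothetical facility: 1,000 kW of servers plus 1,000 kW of cooling, lighting, etc.
print(pue(2000.0, 1000.0))               # 2.0
print(overhead_fraction(2.0))            # 0.5, i.e. half of all power is overhead
print(round(overhead_fraction(1.1), 3))  # 0.091 for a PUE of 1.1
```

Note that overhead as a share of *total* power is (PUE − 1)/PUE, not PUE − 1; the two are easy to mix up.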

When I say “cloud computing,” the usual suspects of the tech world probably come to mind: Microsoft, Amazon Web Services (AWS), Facebook, and Google. These companies have PUEs on the order of 1.1 to 1.2, which is actually pretty efficient. However, according to the Natural Resources Defense Council [2], these enterprise-level and high-performance computing data centers account for only about 32 percent of the electricity used by data centers in the U.S. Roughly 49 percent goes to small and medium-sized server rooms, which more than likely have less incentive to drive down their overhead since it’s a much smaller operating cost. According to a 2007 EPA report, a PUE of 2 might be more appropriate for these smaller server rooms, but let’s use the more conservative industry-average PUE of 1.7 from the Uptime Institute’s 2014 Data Center Survey.

Google states that 30 to 70 percent of the overhead power in an average data center is attributable to cooling [3]. A PUE of 1.7 means that about 41 percent of total power (0.7/1.7) goes to auxiliary systems. If half of that auxiliary power goes to cooling, the midpoint of Google’s range, then about 20 percent of all data center power is spent on cooling. Using the 2014 figure from above, that works out to roughly 14 billion kWh each year just to cool data centers, or an estimated $1.4 billion in electricity costs [4].
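The cooling estimate can be reproduced in a few lines. This is a sketch of the arithmetic only: the 70 billion kWh, the PUE of 1.7, and the 50 percent cooling share come from the text, while the $0.10/kWh electricity price is an assumption, back-calculated from the $1.4 billion figure because the post does not state a price.

```python
TOTAL_DC_KWH_2014 = 70e9          # U.S. data center consumption in 2014 [1]
PUE_AVG = 1.7                     # industry-average PUE used in the text
COOLING_SHARE_OF_OVERHEAD = 0.5   # midpoint of the 30-70 percent range
PRICE_USD_PER_KWH = 0.10          # ASSUMED average electricity price

# Fraction of total power that is overhead: (PUE - 1) / PUE, about 0.41
overhead_share = (PUE_AVG - 1.0) / PUE_AVG
cooling_kwh = TOTAL_DC_KWH_2014 * overhead_share * COOLING_SHARE_OF_OVERHEAD
cooling_cost_usd = cooling_kwh * PRICE_USD_PER_KWH

print(f"auxiliary share: {overhead_share:.0%}")
print(f"cooling energy:  {cooling_kwh / 1e9:.1f} billion kWh")
print(f"cooling cost:    ${cooling_cost_usd / 1e9:.2f} billion")
```

Changing `COOLING_SHARE_OF_OVERHEAD` to 0.3 or 0.7 gives the low and high ends of the range, which is a quick way to see how sensitive the headline number is to that one assumption.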

Wait, I thought this was a marine clean tech blog? I’m getting there. Most data centers are air cooled. Air cooling works moderately well, but not as well as water cooling. This is due to the simple fact that water’s specific heat capacity is more than four times that of air, so a kilogram of water absorbs more than four times as much heat as a kilogram of air for the same temperature rise. In other words, water cooling is more efficient, and better efficiency means lower costs. Well, what if we could put these data centers underwater and cut down on that cooling load? That’s exactly what a team at Microsoft investigated.
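A quick check of the heat capacity claim, using approximate textbook property values near room temperature (these constants are assumed reference values, not taken from the sources cited here). It also compares heat capacity per cubic meter, since cooling systems move fluid by volume and air is far less dense than water.

```python
# Approximate specific heat capacities near room temperature, J/(kg*K)
CP_WATER = 4186.0
CP_AIR = 1005.0
# Approximate densities near room temperature and sea-level pressure, kg/m^3
RHO_WATER = 1000.0
RHO_AIR = 1.2

# Heat absorbed per kilogram per kelvin of temperature rise
per_kg_ratio = CP_WATER / CP_AIR
# Heat absorbed per cubic meter per kelvin (volumetric heat capacity)
per_m3_ratio = (RHO_WATER * CP_WATER) / (RHO_AIR * CP_AIR)

print(f"per kilogram:    {per_kg_ratio:.1f}x")
print(f"per cubic meter: {per_m3_ratio:,.0f}x")
```

The per-kilogram ratio matches the "more than four times" figure; per unit volume the gap is in the thousands, which is why water loops can use much smaller pipes than air systems use ducts.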

The Underwater Data Center

Officially named Project Natick, it was a Microsoft skunkworks project from 2014-2016 to assess the feasibility of an underwater data center. One of the team members, Ben Cutler, was nice enough to hop on the phone with me and discuss the project.

The idea is not as crazy as it might sound. In fact, there are several benefits to an underwater data center. The first is one that we’ve already touched on: cooling. If you go deep enough (generally around 100 meters or more), the water temperature gets quite chilly, anywhere from just above freezing to around 60°F. This is more than sufficient to keep a server room cool, and, more importantly, it’s free! So long, cooling bills.

At depth, water temperatures at most locations remain relatively constant year-round. This brings us to another benefit: predictable ambient conditions. On shore, data facilities must deal with temperatures that fluctuate across the seasons; ambient temperatures can vary over a range of more than 100°F in a single year. This leads to over-designed cooling systems. Underwater, the system has to deal with a much narrower range of temperatures, perhaps around 10°F.

Another benefit of underwater data centers is their inherent location: near the coast. By one account, close to 50 percent of the U.S. population will live near the coastline by 2020 [5]. Now, where would you want to be to have the highest concentration of customers? Unfortunately, coastal property is notoriously expensive, so large facilities such as data centers and warehouses are pushed farther inland, where land is generally cheaper. Underwater “land,” however, can be cheaper still. For example, Deepwater Wind paid only $3 per acre back in 2013 to lease a large block of seafloor off the coast of Rhode Island [7]. For comparison, agricultural land in the U.S. rents for about $144 per acre [8], or forty-eight times more.
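The lease comparison is simple division; the sketch below just makes the two quoted figures and their sources explicit. The constant names are illustrative.

```python
SEAFLOOR_USD_PER_ACRE = 3.0    # Deepwater Wind seafloor lease, 2013 [7]
FARMLAND_USD_PER_ACRE = 144.0  # U.S. agricultural land rent [8]

# How many times more an acre of farmland rents for than an acre of seafloor
ratio = FARMLAND_USD_PER_ACRE / SEAFLOOR_USD_PER_ACRE
print(f"Farmland rent is {ratio:.0f}x the seafloor lease price")
```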

There is another benefit to being close to the customer: reduced latency. Latency is the time it takes for a packet of data to travel from its origin to its destination [6], and it is one reason websites can feel slow to load. By one rule of thumb, every 100 kilometers of distance adds about one millisecond of latency. This may not sound like much, but it adds up, and no one likes waiting for their Game of Thrones episode.
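The rule of thumb lines up with basic physics if it is read as a round-trip figure: light in optical fiber travels at roughly two-thirds of its vacuum speed, about 200,000 km/s, so a 100 km hop takes about 0.5 ms each way. The sketch below compares the two. The refractive index of 1.47 is an assumed typical value, and real networks add routing, queuing, and processing delays on top of this floor.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # in vacuum
FIBER_REFRACTIVE_INDEX = 1.47          # assumed typical value for silica fiber


def rule_of_thumb_ms(distance_km: float) -> float:
    """The rule of thumb from the text: about 1 ms per 100 km."""
    return distance_km / 100.0


def fiber_round_trip_ms(distance_km: float) -> float:
    """Round-trip propagation delay in optical fiber over a straight path.

    This is a lower bound: it ignores routing detours, queuing, and processing.
    """
    speed_km_per_s = SPEED_OF_LIGHT_KM_PER_S / FIBER_REFRACTIVE_INDEX
    return 2.0 * distance_km / speed_km_per_s * 1000.0


for km in (100, 1_000, 4_000):
    print(f"{km:>5} km: rule of thumb {rule_of_thumb_ms(km):5.1f} ms, "
          f"fiber round trip >= {fiber_round_trip_ms(km):5.1f} ms")
```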

Perhaps the most important aspect of an underwater data center would be its short deployment time. According to Ben, the team believes it can deploy its underwater server pods in about three months. Considering that a large data center takes about two years to construct, this is a HUGE improvement. With such a design, data center developers could add capacity in a fraction of the time.

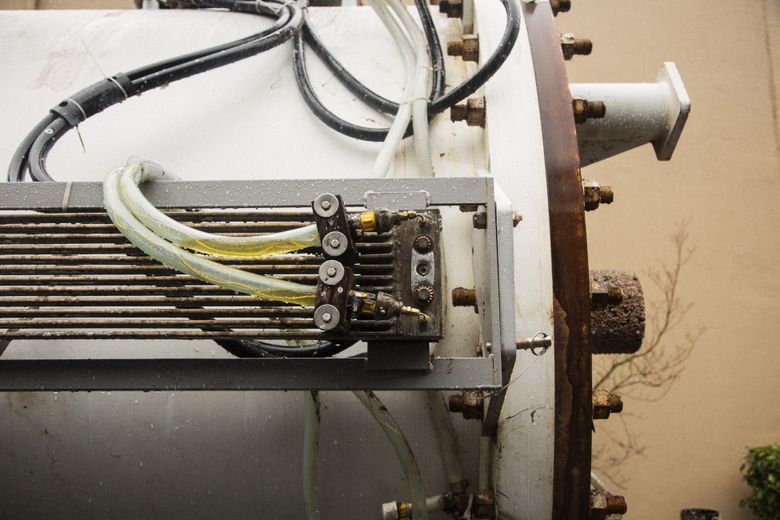

So how does an underwater data center work? The design that the Project Natick team came up with is pretty straightforward and quite similar to some of the designs I talk about in my post on ocean energy storage. Essentially, they crammed a small server rack into a giant steel vessel and then sealed it up. The magic happens once it’s underwater, resting gently on the seafloor. The vessel has two different heat exchangers that use freshwater as the working fluid. An internal heat exchanger absorbs heat from the hot electronics, and the external heat exchanger rejects that heat into the surrounding cold seawater. It’s as simple as that.
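The two-loop design rests on a simple steady-state energy balance: the freshwater loop must carry heat away as fast as the servers produce it, Q = ṁ·c_p·ΔT. The sketch below estimates the coolant flow for a hypothetical 100 kW pod with a hypothetical 10 K coolant temperature rise. Neither number comes from Project Natick, and the sketch assumes essentially all electrical power drawn by the electronics ends up as heat.

```python
CP_FRESHWATER = 4186.0  # J/(kg*K), approximate specific heat of freshwater


def required_flow_kg_per_s(heat_load_w: float, delta_t_k: float) -> float:
    """Coolant mass flow needed to carry heat_load_w with a delta_t_k temperature rise.

    Steady-state energy balance for the coolant loop: Q = m_dot * c_p * delta_T.
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_w / (CP_FRESHWATER * delta_t_k)


# Hypothetical pod: 100 kW of electronics, coolant warms by 10 K across the servers.
flow = required_flow_kg_per_s(100_000.0, 10.0)
# One kilogram of freshwater is roughly one liter.
print(f"{flow:.2f} kg/s (about {flow:.1f} liters per second of freshwater)")
```

Allowing a larger temperature rise halves or thirds the required flow, but it also means the electronics run warmer, which is the trade-off a designer would balance.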

Of course, installing and maintaining anything in the ocean is never simple. Accessing a sealed pod deep underwater to change out sensitive electronics would be enough to scare off any maintenance technician. Then there is always the omnipresent problem of biofouling. It’s a simple law of life that if a device remains within the photic zone, little critters are going to flock to it. As more barnacles and algae latch onto the pod, they reduce the effectiveness of heat transfer.

Too much biogrowth and, ironically, the pod might have difficulty cooling. The guys at Microsoft sidestepped this issue with a common technique from the maritime sector: using copper-nickel (Cu-Ni) alloys on the heat exchanger surfaces. Cu-Ni is a good heat-exchanger material and is corrosion resistant, but more importantly it is also naturally resistant to biofouling [9]. Unfortunately, it is also more expensive than steel, so this may not be a long-term solution.
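One standard way to quantify the fouling penalty is to treat the growth layer as an extra thermal resistance in series with the clean heat exchanger: 1/U_fouled = 1/U_clean + R_f. Because heat rejected is Q = U·A·ΔT, a lower U means less heat removed for the same surface area and temperature difference. The sketch below uses hypothetical values for the clean coefficient and for the fouling resistances; they are illustrative, not measurements from Project Natick.

```python
def fouled_u(u_clean: float, fouling_resistance: float) -> float:
    """Overall heat-transfer coefficient after fouling, W/(m^2*K).

    Fouling adds a thermal resistance in series: 1/U_fouled = 1/U_clean + R_f.
    """
    return 1.0 / (1.0 / u_clean + fouling_resistance)


U_CLEAN = 2000.0  # W/(m^2*K), hypothetical clean water-to-seawater exchanger

# Hypothetical fouling resistances, m^2*K/W: clean, light growth, heavy growth
for r_f in (0.0, 0.0002, 0.001):
    u = fouled_u(U_CLEAN, r_f)
    print(f"R_f = {r_f:.4f}: U = {u:6.0f} W/(m^2*K), {u / U_CLEAN:.0%} of clean")
```

Since Q scales directly with U, the last line of output is also the fraction of the clean heat-rejection capacity that remains, which is why even a thin layer of growth matters.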

So where does the power for such a device come from? The same logic that applies to siting data centers applies to siting power: be close to the customer. Ideally, underwater data centers would be co-located with marine renewable power sources, such as tidal turbines, wave energy converters, or offshore wind. This would cut down on infrastructure and operating costs for the combined system.

Marine renewables face two hurdles. First, current technology has not reached cost parity with solar or onshore wind for most locations, which makes the economics hard to justify on price alone. Second, a large underwater data center would require significant power, on the order of 100 MW or more, and tidal turbine and wave energy projects are still far smaller than that. In the near term, it is unlikely we’ll see massive data centers underwater like the ones we see on shore.

Underwater data centers are a novel idea, but not so far-fetched. They have a number of advantages over terrestrial facilities, the most important being reduced cooling costs and faster deployment. As the population continues to grow and store an ever-increasing number of cat pictures, developers like AWS, Facebook, Microsoft, and Google may look to the ocean for new data center locations.

Huge thank you to Ben Cutler at Microsoft for helping me out with this post. More information about Project Natick can be found here: http://natick.research.microsoft.com/

References

[1] Shehabi, A., Smith, S.J., Horner, N., Azevedo, I., Brown, R., Koomey, J., Masanet, E., Sartor, D., Herrlin, M., Lintner, W. 2016. United States Data Center Energy Usage Report. Lawrence Berkeley National Laboratory, Berkeley, California. LBNL-1005775

[2] Natural Resources Defense Council. 2014. America’s Data Centers Are Wasting Huge Amounts of Energy. New York, NY. www.nrdc.org/energy/data-center-efficiency-assessment.asp

[3] Google Data Centers. Efficiency: How we do it. https://www.google.com/about/datacenters/efficiency/internal/

[4] U.S. Energy Information Administration. Electric Power Monthly. https://www.eia.gov/electricity/monthly/epm_table_grapher.php?t=epmt_5_6_a

[5] National Ocean Service. What percentage of the American population lives near the coast? https://oceanservice.noaa.gov/facts/population.html

[6] Cloudways. How Server Location Affects Latency? https://www.cloudways.com/blog/how-server-location-affects-latency/

[7] United States Department of the Interior Bureau of Ocean Energy Management. Commercial Lease of Submerged Lands for Renewable Energy Development on the Outer Continental Shelf. https://www.boem.gov/Renewable-Energy-Program/State-Activities/RI/Executed-Lease-OCS-A-0486.aspx

[8] National Agricultural Statistics Service Highlights. 2015 Agricultural Land: Land Values and Cash Rents. https://www.nass.usda.gov/Publications/Highlights/2015_LandValues_CashRents/2015LandValuesCashRents_FINAL.pdf

[9] Copper Development Association, Inc. Cu-Ni Alloy Resistance to Corrosion and Biofouling. https://www.copper.org/applications/marine/cuni/properties/biofouling/resistance_to_corrosion_and_biofouling.html

[10] U.S. Department of Energy. Washington, DC Energy Sector Risk Profile. https://www.hsdl.org/?view&did=768044